Why everyone's wrong about Apple's $999 monitor stand

You can't beat an Apple keynote for getting people riled. If there's not something in there that makes someone utterly furious, then Apple's probably not doing its job properly. So it's no surprise that this week's WWDC 2019 reveal of the new Mac Pro has provoked a torrent of online scorn. Not for the Mac Pro itself, mind, but for the Pro Stand designed for the swish new Pro Display XDR monitor that accompanies it. The stand is sold separately, for $999.

What? $999 for a monitor stand? Is Apple stupid? LOLZ!!!

Clearly we're into uncharted territory here. You buy a monitor, you kind of expect the stand to come with, right? And you absolutely, definitely don't expect to be stung for a grand.

And so the big takeaway from WWDC seems to be that Apple's completely lost the plot with its $999 monitor stand. Because seriously, what sort of idiot's going to pay that sort of money for something you should get thrown in for free?

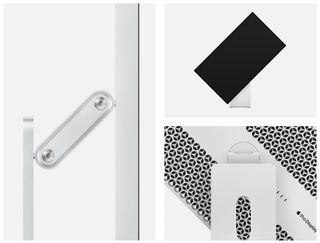

The Pro Stand does all this, but is it really worth a grand? [Image: Apple]

Well, hold your horses for just one second. For starters, Apple clearly isn't stupid. It does very nicely indeed out of high-end kit where you pay a premium for the Apple badge and the slick design. Everyone knows that for the price of the average iMac or MacBook you could buy one of the best computers for graphic design from another maker: machines that are a whole lot more powerful, although some of them look like they've been hit with a sack of ugly spanners. For many people, that difference in looks makes it well worth paying the additional Apple tax.

So we have no doubt whatsoever that a healthy chunk of that $999 Pro Stand price tag is pure profit aimed straight at the top of Apple's ever-growing cash mountain. But we also have no doubt that Apple has done its sums and its research and decided that this is the right price for the Pro Stand.

Built to perform

When you look at what the Pro Stand actually does, it becomes apparent that this is a serious piece of kit that's built to perform. Apple says it makes the seven-and-a-half-kilo Pro Display XDR feel weightless, lets you adjust its height and tilt effortlessly, and holds the screen absolutely steady once it's in place. We're not engineers, but that sounds like quite an achievement to us, especially when you factor in that the Pro Stand also has to be utterly reliable and built to last. After all, it's the one thing stopping your $5,000 monitor from smashing onto the desk.

So, we suspect that, as with so much other Apple kit in the past, once the Pro Stand's out there and people get to play with it, they're going to love it. And they're probably going to go on and on about it. The bastards.

Still, selling a $5,000 monitor without any stand at all feels like a bit of a misfire. If you're not sold on the Pro Stand, there is another option: the $199 VESA mount adapter, which lets you attach your Pro Display XDR to the wall mount or desk stand of your choice. We've had a bit of a look around, though, and there doesn't seem to be a VESA stand that looks as good as the Pro Stand or works anywhere near as nicely.

Of course, Apple could have shipped the XDR with a basic stand like the one on an iMac and offered the Pro Stand as an optional extra. But hey, that's Apple; we suspect it would see that as an inelegant solution.

Selling a monitor without a stand seems like madness, but consider the alternative. If Apple bundled the Pro Stand and bumped the XDR's price by $1,000, anyone who wanted to wall-mount their monitor instead would be rightly annoyed at being charged for a high-end stand they didn't want. And given that a lot of Mac Pros are going to end up in editing suites and the like, that could be quite a large proportion of the market.

If your workspace doesn't look like this then $12,000 of new Apple hardware probably isn't for you [Image: Apple]

And ultimately, it seems that most of the people complaining about the Pro Stand and its price aren't the people who are actually going to buy it. The new Mac Pro isn't for the average creative; it's for serious video and film production companies and the like. It's clearly expensive for what it is, but it's also clearly going to find a market, expensive extras and all, complete with the Pro Display XDR and its $999 stand. That's because it's Apple, and because it does exactly what these high-end studios need while looking fantastic. Suck it down, haters.